JOE GIBBS POLITZ

This work is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License. Linked resources may have their own licenses, refer to them for details.

Code Tracing in Video Exams

Joint work with Rachel Lim and Mia Minnes.

I’ve been using video exams for a few years. The basic idea is that we have students record screencasts of themselves tracing a program they wrote, and submit that video as (part of) their “exam.” We grade them on the accuracy of their traces alongside typical automatic and manual grading of their code. Here’s the kind of prompt we give:

averageWithoutLowest which takes an array of doubles and returns the average (mean) of them, leaving out the lowest number. averageWithoutLowest with an array of at length 4 where the lowest element is not the first or last element in the array. Write down a trace table corresponding to one loop you wrote in the body of the method. Pre-write the header row of the table, but you must fill in the contents of the table on the video. As you fill in the "end" value for each variable, indicate which statement(s) in the program caused its value to change.

It turns out there are different ways students do this. Here are two different choices they made, both "correct" answers but filled in different orders:

Do you see the difference? One follows Java's evaluation order very precisely, while the other groups the variable updates in the order the variables happen to be written out in the table.

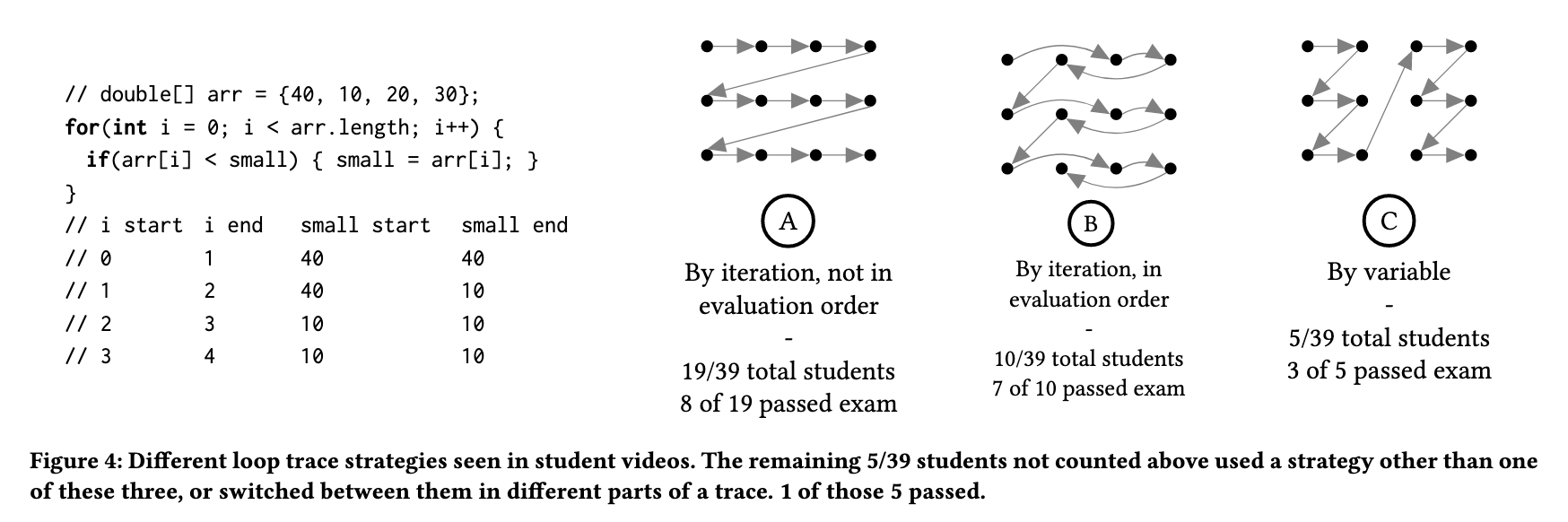

Now, I wasn’t thinking particularly hard about loop trace ordering beyond the per-iteration tabular setup when I assigned this question. But it turns out it’s pretty interesting to look at how students approach this. Here’s a key figure from the SIGCSE 2023 paper we wrote analyzing these videos:

We identified three different orderings that most students in our sample used to fill in the traces, illustrated by the arrows on the right-hand side of the figure. Order B is the one that most closely matches the semantics of Java: the student filled in each value in the table in the same order the variables would update in memory. Order A and Order C follow a per-variable pattern that works for this example but could be incorrect for some kinds of interleaved updates of variables (see section 4.1 in the paper).
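To make "the order the variables would update in memory" concrete, here is a minimal sketch that instruments one possible averageWithoutLowest so that every assignment is logged at the moment Java performs it. This is not a student submission or the code from the paper; the class name TraceDemo, the record helper, and the choice of a while loop (so the update to i is an ordinary statement that can be logged) are all illustrative assumptions. Reading the log top to bottom gives the row-by-row, statement-by-statement order that Order B follows.

```java
import java.util.ArrayList;
import java.util.List;

public class TraceDemo {
    // Hypothetical trace log: one entry per variable update, appended in the
    // exact order Java executes the assignments.
    static final List<String> log = new ArrayList<>();

    static void record(int iteration, String var, Object value) {
        log.add("iter " + iteration + ": " + var + " = " + value);
    }

    // One possible averageWithoutLowest (illustrative, not from the exam).
    // Assumes nums has at least 2 elements.
    static double averageWithoutLowest(double[] nums) {
        double sum = 0;
        double lowest = nums[0];
        int i = 0;
        // A while loop equivalent to for (int i = 0; i < nums.length; i += 1),
        // written this way so the update to i can be logged like any other.
        while (i < nums.length) {
            int iter = i; // which row of the trace table we are filling in
            sum += nums[i];
            record(iter, "sum", sum);
            if (nums[i] < lowest) {
                lowest = nums[i];
                record(iter, "lowest", lowest);
            }
            i += 1;
            record(iter, "i", i);
        }
        return (sum - lowest) / (nums.length - 1);
    }

    public static void main(String[] args) {
        // Length 4, with the lowest element neither first nor last.
        double[] input = {4.0, 1.0, 3.0, 8.0};
        double result = averageWithoutLowest(input);
        log.forEach(System.out::println);
        System.out.println("result = " + result);
    }
}
```

For the input above, the log begins `iter 0: sum = 4.0`, `iter 0: i = 1`, `iter 1: sum = 5.0`, `iter 1: lowest = 1.0`, and the method returns 5.0. Note that lowest only appears in rows where it actually changes, which is exactly the kind of detail a per-variable fill order can gloss over.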

Interestingly, the choice of ordering wasn't a factor in our rubric, but it does seem to have some relationship to students' performance. Of the different strategies (A, B, C, and other), Order A was the only one for which a majority of students in our sample did not pass the exam. So the choice of ordering, which reflects something about students' choice or ability to match Java's semantics closely in their trace, could be a signal related to overall performance.

This is pretty cool – I can already see ways to write questions that get at precisely the differences between these traces, and how to give students some direct instruction and examples that distinguish them. This is just one result from a set of questions we had about what we can learn from these exams, including:

Of course, we’re far from the first to notice interesting misconceptions from student program traces! What I’m excited about is getting this kind of information as a natural part of an assessment that’s reasonable to give in my class. The richness of video gives us a temporal dimension that turns out to have valuable signal about how students are understanding their programs. Check out the full paper for more on these questions, including analysis of another exam question with interesting misconceptions about recursion.